Amazon MLS-C01 Real Exam Questions

The questions for MLS-C01 were last updated on Apr 15, 2025.

- Exam Code: MLS-C01

- Exam Name: AWS Certified Machine Learning - Specialty

- Certification Provider: Amazon

- Latest update: Apr 15, 2025

An office security agency conducted a successful pilot using 100 cameras installed at key locations within the main office. Images from the cameras were uploaded to Amazon S3 and tagged using Amazon Rekognition, and the results were stored in Amazon ES. The agency is now looking to expand the pilot into a full production system using thousands of video cameras in its office locations globally. The goal is to identify activities performed by non-employees in real time.

Which solution should the agency consider?

- A . Use a proxy server at each local office and for each camera, and stream the RTSP feed to a unique Amazon Kinesis Video Streams video stream. On each stream, use Amazon Rekognition Video and create a stream processor to detect faces from a collection of known employees, and alert when non-employees are detected.

- B . Use a proxy server at each local office and for each camera, and stream the RTSP feed to a unique Amazon Kinesis Video Streams video stream. On each stream, use Amazon Rekognition Image to detect faces from a collection of known employees and alert when non-employees are detected.

- C . Install AWS DeepLens cameras and use the DeepLens_Kinesis_Video module to stream video to Amazon Kinesis Video Streams for each camera. On each stream, use Amazon Rekognition Video and create a stream processor to detect faces from a collection on each stream, and alert when non-employees are detected.

- D . Install AWS DeepLens cameras and use the DeepLens_Kinesis_Video module to stream video to Amazon Kinesis Video Streams for each camera. On each stream, run an AWS Lambda function to capture image fragments and then call Amazon Rekognition Image to detect faces from a collection of known employees, and alert when non-employees are detected.

A manufacturing company has structured and unstructured data stored in an Amazon S3 bucket. A Machine Learning Specialist wants to use SQL to run queries on this data.

Which solution requires the LEAST effort to be able to query this data?

- A . Use AWS Data Pipeline to transform the data and Amazon RDS to run queries.

- B . Use AWS Glue to catalogue the data and Amazon Athena to run queries.

- C . Use AWS Batch to run ETL on the data and Amazon Aurora to run the queries.

- D . Use AWS Lambda to transform the data and Amazon Kinesis Data Analytics to run queries.

A Data Scientist is working on an application that performs sentiment analysis. The validation accuracy is poor, and the Data Scientist thinks that the cause may be a rich vocabulary and a low average frequency of words in the dataset.

Which tool should be used to improve the validation accuracy?

- A . Amazon Comprehend syntax analysis and entity detection

- B . Amazon SageMaker BlazingText cbow mode

- C . Natural Language Toolkit (NLTK) stemming and stop word removal

- D . Scikit-learn term frequency-inverse document frequency (TF-IDF) vectorizers
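For readers unfamiliar with the weighting named in option D, the following is a minimal sketch of what a TF-IDF computation does. It is written in plain Python rather than scikit-learn, and the smoothed IDF formula, the whitespace tokenizer, and the sample sentences are all assumptions made for illustration; it is not taken from the exam material.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    Weight = raw term count * smoothed inverse document frequency,
    where idf = ln((1 + N) / (1 + df)) + 1. This smoothing is one
    common convention and is an assumption of this sketch.
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({
            term: count * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return weights


# Hypothetical toy corpus, for illustration only.
docs = ["great service great price", "poor service", "great phone"]
scores = tf_idf(docs)
```

In this toy corpus, a term that appears in a single document ("poor") receives a higher weight than one that appears in several ("service"), which is the effect the weighting is designed to produce.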

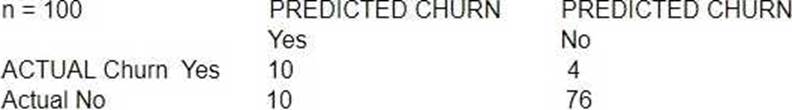

A large mobile network operating company is building a machine learning model to predict customers who are likely to unsubscribe from the service. The company plans to offer an incentive for these customers as the cost of churn is far greater than the cost of the incentive.

The model produces the following confusion matrix after evaluating on a test dataset of 100 customers:

Based on the model evaluation results, why is this a viable model for production?

- A . The model is 86% accurate and the cost incurred by the company as a result of false negatives is less than the false positives.

- B . The precision of the model is 86%, which is less than the accuracy of the model.

- C . The model is 86% accurate and the cost incurred by the company as a result of false positives is less than the false negatives.

- D . The precision of the model is 86%, which is greater than the accuracy of the model.
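The options above turn on the difference between accuracy and precision and on the relative cost of the two error types. The sketch below shows how those quantities are derived from confusion-matrix counts. The counts and the per-error costs are hypothetical, chosen only so that they total 100 customers; they are not read from the image.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }


def error_cost(fp, fn, cost_fp, cost_fn):
    """Total cost of misclassifications given a per-error cost for each type."""
    return fp * cost_fp + fn * cost_fn


# Hypothetical counts (assumption, not taken from the exam image).
metrics = classification_metrics(tp=10, fp=10, fn=4, tn=76)

# Hypothetical costs: an unneeded incentive (false positive) is assumed
# to cost far less than a missed churner (false negative).
cost = error_cost(fp=10, fn=4, cost_fp=10, cost_fn=100)
```

With these assumed numbers, accuracy and precision differ substantially, which is why the two metrics should not be read interchangeably when evaluating a churn model.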

A company’s Machine Learning Specialist needs to improve the training speed of a time-series forecasting model using TensorFlow. The training is currently implemented on a single-GPU machine and takes approximately 23 hours to complete. The training needs to be run daily.

The model accuracy is acceptable, but the company anticipates a continuous increase in the size of the training data and a need to update the model on an hourly, rather than a daily, basis. The company also wants to minimize coding effort and infrastructure changes.

What should the Machine Learning Specialist do to the training solution to allow it to scale for future demand?

- A . Do not change the TensorFlow code. Change the machine to one with a more powerful GPU to speed up the training.

- B . Change the TensorFlow code to implement a Horovod distributed framework supported by Amazon SageMaker. Parallelize the training to as many machines as needed to achieve the business goals.

- C . Switch to using a built-in AWS SageMaker DeepAR model. Parallelize the training to as many machines as needed to achieve the business goals.

- D . Move the training to Amazon EMR and distribute the workload to as many machines as needed to achieve the business goals.

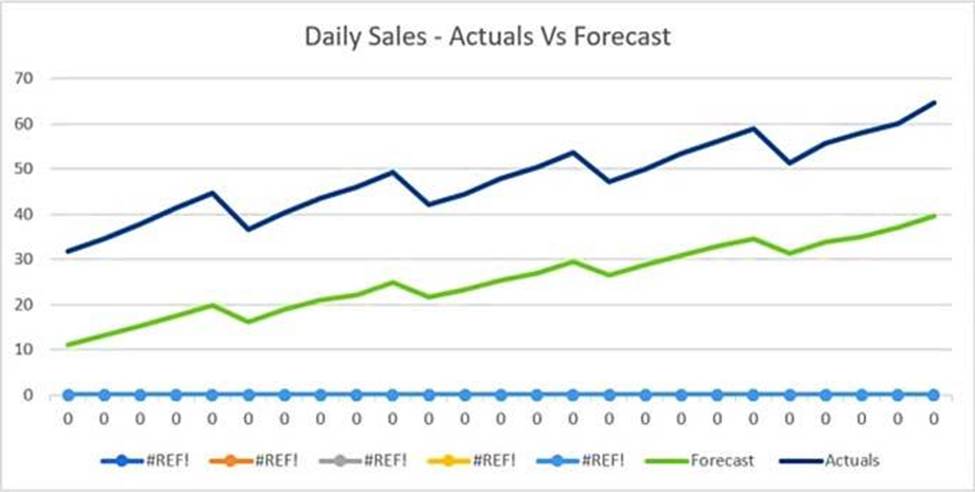

The displayed graph is from a forecasting model for testing a time series.

Considering the graph only, which conclusion should a Machine Learning Specialist make about the behavior of the model?

- A . The model predicts both the trend and the seasonality well.

- B . The model predicts the trend well, but not the seasonality.

- C . The model predicts the seasonality well, but not the trend.

- D . The model does not predict the trend or the seasonality well.

A Machine Learning Specialist is building a supervised model that will evaluate customers’ satisfaction with their mobile phone service based on recent usage. The model’s output should infer whether or not a customer is likely to switch to a competitor in the next 30 days.

Which of the following modeling techniques should the Specialist use?

- A . Time-series prediction

- B . Anomaly detection

- C . Binary classification

- D . Regression

A Marketing Manager at a pet insurance company plans to launch a targeted marketing campaign on social media to acquire new customers.

Currently, the company has the following data in Amazon Aurora:

• Profiles for all past and existing customers

• Profiles for all past and existing insured pets

• Policy-level information

• Premiums received

• Claims paid

What steps should be taken to implement a machine learning model to identify potential new customers on social media?

- A . Use regression on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media.

- B . Use clustering on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media.

- C . Use a recommendation engine on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media.

- D . Use a decision tree classifier engine on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media.

A Machine Learning team uses Amazon SageMaker to train an Apache MXNet handwritten digit classifier model using a research dataset. The team wants to receive a notification when the model is overfitting. Auditors want to view the Amazon SageMaker log activity report to ensure there are no unauthorized API calls.

What should the Machine Learning team do to address the requirements with the least amount of code and fewest steps?

- A . Implement an AWS Lambda function to log Amazon SageMaker API calls to Amazon S3. Add code to push a custom metric to Amazon CloudWatch. Create an alarm in CloudWatch with Amazon SNS to receive a notification when the model is overfitting.

- B . Use AWS CloudTrail to log Amazon SageMaker API calls to Amazon S3. Add code to push a custom metric to Amazon CloudWatch. Create an alarm in CloudWatch with Amazon SNS to receive a notification when the model is overfitting.

- C . Implement an AWS Lambda function to log Amazon SageMaker API calls to AWS CloudTrail. Add code to push a custom metric to Amazon CloudWatch. Create an alarm in CloudWatch with Amazon SNS to receive a notification when the model is overfitting.

- D . Use AWS CloudTrail to log Amazon SageMaker API calls to Amazon S3. Set up Amazon SNS to receive a notification when the model is overfitting.
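Several options above mention adding code to push a custom metric to Amazon CloudWatch. As a rough sketch of what such code might look like, the function below builds the arguments for the CloudWatch `put_metric_data` call. The namespace, the metric name, and the choice of "validation loss minus training loss" as an overfitting signal are assumptions made for illustration, and the actual AWS call is left as a comment so the block runs without credentials.

```python
def build_overfit_metric(train_loss, val_loss,
                         namespace="Custom/Training",
                         metric_name="ValidationTrainLossGap"):
    """Build keyword arguments for CloudWatch put_metric_data.

    The gap between validation and training loss is used here as a
    simple overfitting signal. The namespace and metric name are
    hypothetical placeholders.
    """
    return {
        "Namespace": namespace,
        "MetricData": [{
            "MetricName": metric_name,
            "Value": val_loss - train_loss,
            "Unit": "None",
        }],
    }


# Hypothetical loss values from one training epoch.
payload = build_overfit_metric(train_loss=0.12, val_loss=0.45)

# With AWS credentials configured, the payload could be sent with boto3:
#   import boto3
#   boto3.client("cloudwatch").put_metric_data(**payload)
```

A CloudWatch alarm on this metric, with an Amazon SNS topic as its action, would then deliver the notification when the gap crosses a chosen threshold.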

A Machine Learning Specialist is assigned a TensorFlow project using Amazon SageMaker for training, and needs to continue working for an extended period with no Wi-Fi access.

Which approach should the Specialist use to continue working?

- A . Install Python 3 and boto3 on their laptop and continue the code development using that environment.

- B . Download the TensorFlow Docker container used in Amazon SageMaker from GitHub to their local environment, and use the Amazon SageMaker Python SDK to test the code.

- C . Download TensorFlow from tensorflow.org to emulate the TensorFlow kernel in the SageMaker environment.

- D . Download the SageMaker notebook to their local environment then install Jupyter Notebooks on their laptop and continue the development in a local notebook.